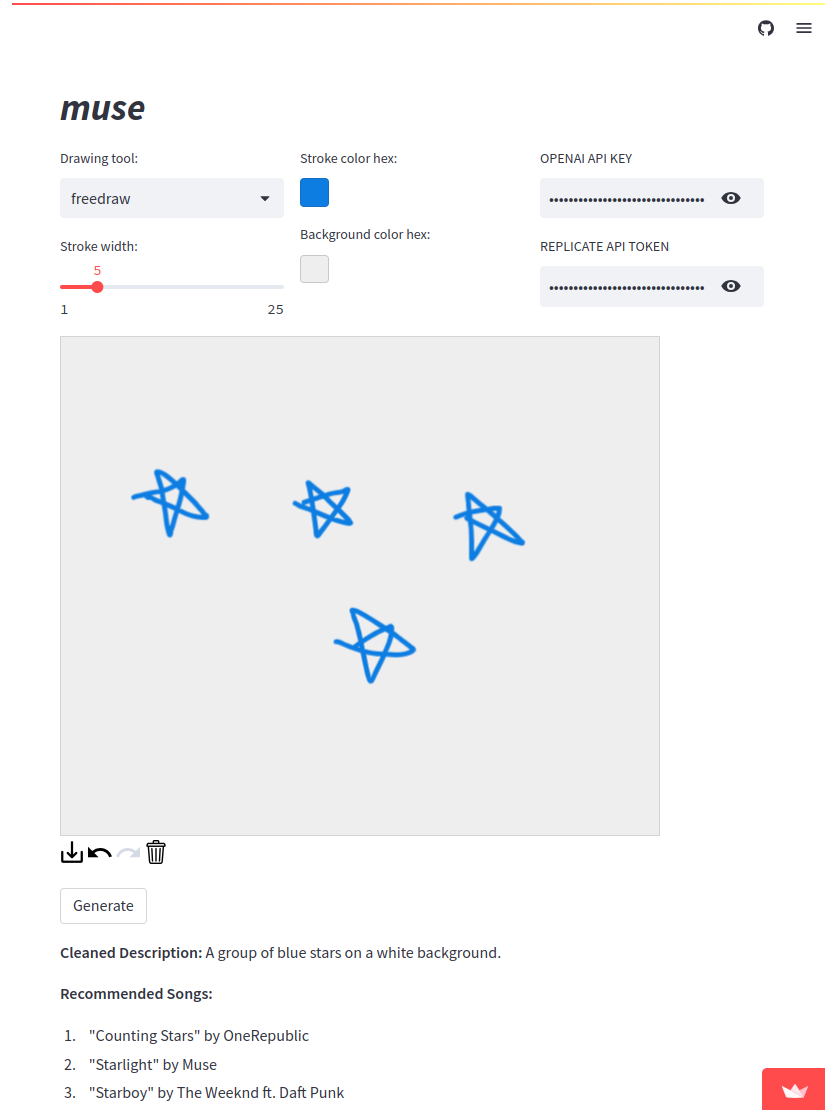

AI models have become pretty powerful, and these days it's really easy to turn very high-level ideas into working prototypes. One idea my partner unnu and I played around with some time back was a party game where a program generates music playlists from sketches. We use it to explore music in a playful way, especially in the following two patterns:

- Draw the right image to find a particular song, say, ‘Yellow Submarine’. Kind of like playing Pictionary with machine assistance.

- Draw anything (a mood, a picture, a vision) and discover which songs get suggested.

There are two models: one for describing the image (salesforce/blip as of this writing) and one for parsing the description and generating song lists (currently gpt-3.5-turbo).
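To make the two-stage flow concrete, here is a minimal Python sketch of the pipeline. It is not the app's actual code: the captioner and the chat model are passed in as plain functions (`describe_image` and `complete` are hypothetical wrappers you would back with the Replicate and OpenAI clients), and the prompt wording, the 'Title - Artist' reply format, and the `found_target` check for the Pictionary-style mode are all assumptions for illustration.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical prompt; the app's real prompt is not shown in this post.
PROMPT_TEMPLATE = (
    "Here is a description of a sketch: {caption}\n"
    "Suggest {n} songs that match it, one per line, formatted as "
    "'Title - Artist'."
)


def build_prompt(caption: str, n: int = 5) -> str:
    """Stage 2 input: wrap the image caption in an instruction for the LLM."""
    return PROMPT_TEMPLATE.format(caption=caption.strip(), n=n)


def parse_songs(text: str) -> List[Tuple[str, str]]:
    """Parse lines like '1. Yellow Submarine - The Beatles' into (title, artist)."""
    songs = []
    for line in text.splitlines():
        # Drop list markers such as '1.', '2)', '-' or '*' at the start of a line.
        line = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if " - " not in line:
            continue  # skip chatter that isn't a 'Title - Artist' pair
        title, artist = line.split(" - ", 1)
        songs.append((title.strip(" \"'"), artist.strip()))
    return songs


def sketch_to_playlist(
    image_path: str,
    describe_image: Callable[[str], str],  # hypothetical wrapper around the captioner
    complete: Callable[[str], str],        # hypothetical wrapper around the chat model
    n: int = 5,
) -> List[Tuple[str, str]]:
    caption = describe_image(image_path)        # stage 1: image -> text
    reply = complete(build_prompt(caption, n))  # stage 2: text -> song list
    return parse_songs(reply)


def normalize(title: str) -> str:
    """Lowercase and drop punctuation so 'Help!' matches 'help'."""
    return re.sub(r"[^a-z0-9 ]", "", title.lower()).strip()


def found_target(playlist: List[Tuple[str, str]], target: str) -> bool:
    """Pictionary mode: did the drawing surface the song the player aimed for?"""
    return any(normalize(title) == normalize(target) for title, _ in playlist)


if __name__ == "__main__":
    # Stand-in models so the sketch runs offline.
    fake_caption = lambda path: "a yellow submarine under the sea"
    fake_llm = lambda prompt: (
        "1. Yellow Submarine - The Beatles\n2. Octopus's Garden - The Beatles"
    )
    playlist = sketch_to_playlist("drawing.png", fake_caption, fake_llm)
    print(playlist)
    print(found_target(playlist, "yellow submarine"))  # True
```

Passing the two models in as callables keeps the glue logic testable without API keys and lets you swap either model without touching the parsing code.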

There is surely plenty of room for improvement here.

First, the image describer is unaware of the context, so it doesn't do well on a few categories of songs. For example, we weren't able to get 'Where the Streets Have No Name' through this pipeline. I expect multimodal systems like GPT-4 with image input would do much better here. Second, the app could use a proper search so that the suggestions aren't limited by the LLM's training-data cutoff. Still, it's pretty usable without complicating things too much.

The app is accessible here[1]. The source code is here.

Footnotes:

[1] Sorry, you'll have to provide your own API keys. Replicate is used for the image-description model and OpenAI for parsing and generating songs.